We’re excited to share the latest features and performance improvements that make Databricks SQL simpler, faster, and more affordable than ever. Databricks SQL is an intelligent data warehouse within the Databricks Data Intelligence Platform and is built on the lakehouse architecture. In fact, Databricks SQL has over 8,000 customers today!

In this blog, we will share details for AI/BI, intelligent experiences, and predictive optimizations. We also have powerful new price/performance capabilities. We hope you like our innovative features from the last three months.

AI/BI

Since launching AI/BI at Data and AI Summit 2024 (DAIS), we’ve added many exciting new enhancements. If you’ve not yet tried AI/BI, you’re missing out. It’s included for all Databricks SQL customers to use without the need for additional licenses. AI/BI is a new type of AI-first business intelligence product, native to Databricks SQL and built to democratize analytics and insights for everyone in your organization.

In case you missed it, we just published a What’s New in AI/BI Dashboards for Fall 2024 blog highlighting lots of new features like a new Dashboard Genie, multi-page reports, interactive point maps and more. These capabilities add to a long list of enhancements we’ve added since the summer, including next-level interactivity, the ability to share dashboards beyond the Databricks workspace, and dashboard embedding. For AI/BI Genie, we’ve been focused on helping you build trust in the answers it generates through Genie benchmarks and the ability to request a review.

Stay tuned for even more new features this year! The AI/BI release notes provide more details.

Intelligent experiences

We’re infusing ML and AI throughout our products because automation helps you focus on higher-value work. The intelligence also helps you democratize access to data and AI with built-in natural language experiences built for your specific business and on your specific data.

SQL development gets a boost

We get it: SQL is your best friend. Check this out: a new SQL editor that combines the best parts of the platform into a unified and streamlined SQL authoring experience. It also offers several improved features, including multiple statement results, real-time collaboration, enhanced Databricks Assistant integrations, and editor productivity features to take your SQL development to the next level. Learn more about the new SQL editor.

We have also made more improvements to help you construct your SQL, such as using named parameter marker syntax (across the SQL editor, notebooks, and AI/BI dashboards).
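For readers new to named parameter markers, here is a minimal sketch of the idea: values are bound to `:name` placeholders by name rather than by position. It assumes Python's built-in `sqlite3` module as a local stand-in for a SQL warehouse (it happens to accept the same `:name` style); the `orders` table, its columns, and the parameter names are hypothetical.

```python
import sqlite3

# sqlite3 is only a local stand-in for a SQL warehouse; the table and rows are made up.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("EMEA", 120.0), ("EMEA", 80.0), ("APAC", 45.0)],
)

# Named parameter markers (:region, :min_amount) are bound by name, not by position.
query = """
    SELECT region, SUM(amount) AS total
    FROM orders
    WHERE region = :region AND amount >= :min_amount
    GROUP BY region
"""
params = {"region": "EMEA", "min_amount": 100.0}
rows = conn.execute(query, params).fetchall()
print(rows)
```

Because the values travel separately from the SQL text, the same statement can be reused with different inputs without string concatenation.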

AI-generated comments

Well-commented SQL is essential for collaboration and maintainability. Instead of starting from scratch, you can use AI-generated comments for catalogs, schemas, volumes, models, and functions. You can even use Assistant for inline chat to help edit your comments.

New features and enhancements

Finally, we have a long list of smaller improvements that will make your experience smoother. For that extensive list, check the Databricks SQL Release Notes.

Predictive optimization of your platform

We’re continuously striving to optimize all of your workloads. One method is to use AI/ML to handle some details for you automatically. We have a few new features for you.

Automated statistics

Query planning gets smarter by using statistics, but that requires you to know how to run the ANALYZE command. However, fewer than 5% of customers run ANALYZE. And, because tables can have hundreds of columns (or more) and query patterns change over time, you may need help running workloads optimally.

Specifically, you may face these situations:

- Data Engineers have to manage “optimization” jobs to maintain statistics

- Data Engineers have to determine which tables need to have statistics updated and how often

- Data Engineers have to ensure that the key columns are among the first 32 columns, where statistics are collected by default

- Data Engineers may have to rebuild tables if query patterns change or new columns are added

With the introduction of Automated Statistics, Databricks now manages optimization workloads and statistics collection for you. With Automated Statistics, collecting statistics during ingest is significantly more efficient than running a standalone ANALYZE command. Also, with the predictive optimization system tables, you have the observability to track the cost and reliability of the service.

Query profiler

We also released new capabilities for the query history and profiler, which are available in Private Preview. Databricks SQL materialized views and streaming tables now have better plans and query insights.

Query History and Query Profile now cover queries executed through a DLT pipeline. Moreover, query insights for Databricks SQL materialized views (MVs) and streaming tables (STs) have been improved. These queries can be found on the Query History page alongside queries executed on SQL Warehouses and Serverless Compute. They are also listed in the context of the Pipeline UI, Notebooks, and the SQL editor.

World-class price/performance

The query engine continues to be optimized to scale compute costs with near linearity to data volume. Our goal is ever-better performance in a world of ever-increasing concurrency, with ever-decreasing latency.

Performance updates

In the past five months, we have also released new advancements in Databricks SQL that improve performance and reduce your total cost of ownership (TCO). We understand that performance is paramount for delivering a seamless user experience and optimizing costs. At Data and AI Summit 2024 (DAIS), we announced that we had improved performance for the same interactive BI queries by 73% since Databricks SQL’s launch in 2022. That’s 4x faster! A little over five months later, we’re happy to announce that we are now 77% faster, as calculated by the Databricks Performance Index (DPI)!

These aren’t just benchmarks. We track millions of real customer queries that run repeatedly over time. Analyzing these similar workloads allows us to observe a 77% speed improvement, reflecting the cumulative impact of our continued optimizations.
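To see how a percentage like 73% relates to a multiple like 4x, the sketch below converts one into the other. It assumes that "X% faster" means the same queries take X% less time to run; that reading is an assumption for illustration, not a published definition of the DPI, and the function name is hypothetical.

```python
def speedup_from_reduction(pct_faster: float) -> float:
    """Convert 'X% faster' (read here as X% less runtime) into a speedup multiple."""
    if not 0 <= pct_faster < 100:
        raise ValueError("pct_faster must be in [0, 100)")
    # If runtime shrinks to (1 - X/100) of the original, throughput grows by the inverse.
    return 1.0 / (1.0 - pct_faster / 100.0)


for pct in (73, 77):
    print(f"{pct}% faster -> {speedup_from_reduction(pct):.2f}x")
```

Under that assumption, 73% works out to roughly 3.7x (rounded to 4x in the text) and 77% to roughly 4.3x, which shows how a few extra percentage points translate into a noticeably larger multiple.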

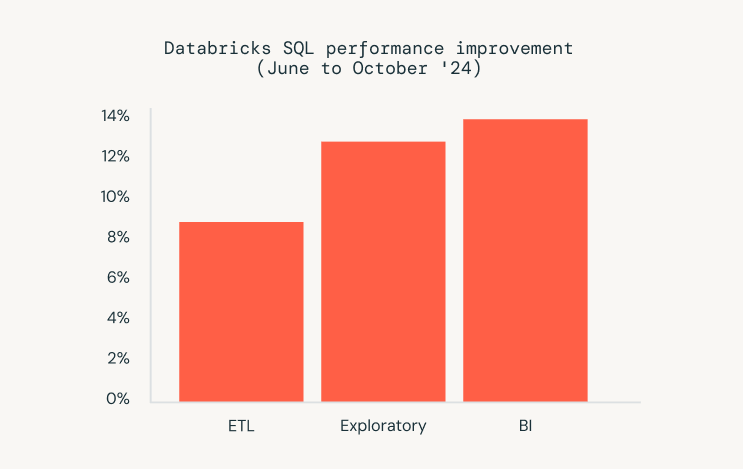

Teaser alert: We have also made Extract, Transform, and Load (ETL) workloads 9% more efficient, BI workloads 14% more performant, and exploratory workloads 13% faster. Check out the performance updates blog for details.

System tables

System tables are the recommended way to observe important details about your Databricks account, including cost information, data access, workload performance and more. Specifically, they are Databricks-owned tables that you can access from a variety of surfaces, usually with low latency.

The Databricks system tables platform is now generally available, including the system.billing.usage and system.billing.list_prices tables. The billing schema is enabled automatically for every metastore. The billing system tables will remain available at no additional cost across clouds, including one year of free retention.

Learn how to monitor usage with system tables.

Databricks SQL Serverless warehouses

We continue expanding availability, compliance, and more for our Databricks SQL Serverless warehouses. Databricks SQL Serverless warehouses offer instant and elastic compute (decoupled from storage), and the compute is managed by Databricks.

- New regions:

  - Google Cloud Platform (GCP) is available across the existing seven regions.

  - AWS adds the eu-west-2 region for London.

  - Azure adds four regions: France Central, Sweden Central, Germany West Central, and UAE North.

- HIPAA: HIPAA compliance is available in all regions and all clouds (Azure, AWS, and GCP). HIPAA compliance was also added to AWS us-east-1 and ap-southeast-2.

- Private Link: Private Link helps you use a private network from your users to your data and back again. It is now generally available.

- Secure Egress: Configure egress controls on your network. Secure egress is now available in Public Preview.

- Compliance security profile: Support for serverless SQL warehouses with the compliance security profile is now available. In regions where this feature is supported, workspaces enabled for the compliance security profile now use serverless SQL warehouses as their default warehouse type. See which compute resources get enhanced security and serverless compute feature availability.

- Serverless default: Starter warehouses are now serverless by default. This setting change helps you get started quickly instead of waiting for IT to provision resources.

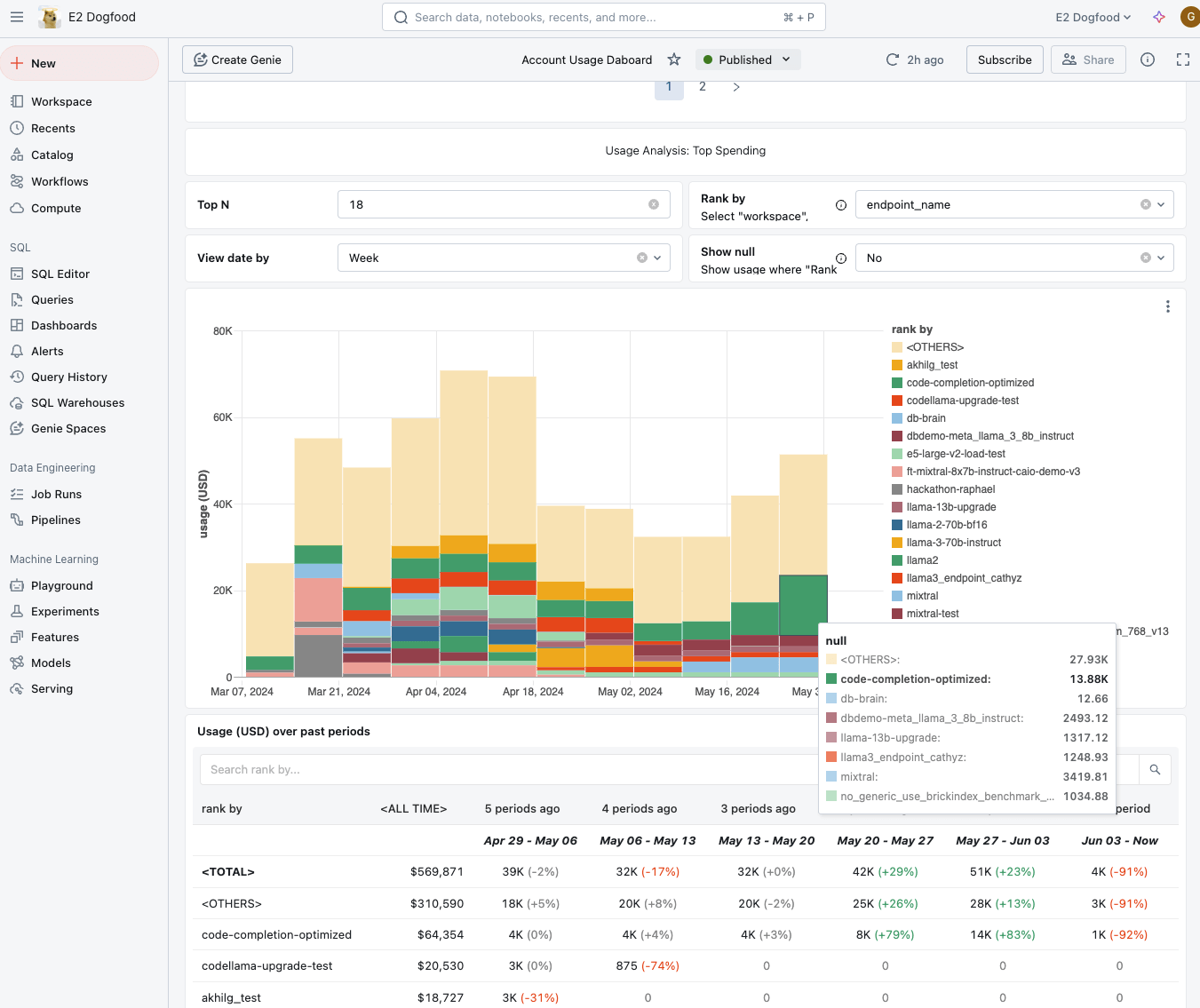

Cost and Usage Dashboard powered by AI/BI

To help you understand your Databricks costs and identify expensive workloads, we launched the new Cost and Usage Dashboard powered by AI/BI. With the dashboard, you can see the context of your spending and understand which project your costs are originating from. Finally, you can find your most expensive jobs, clusters, and endpoints.

To use the dashboards, set them up in the Account Console. The dashboards are available in AWS non-govcloud, Azure, and GCP. You own and manage the dashboards, so customize them to fit your business. To learn more about these dashboards in Public Preview, check out the documentation.

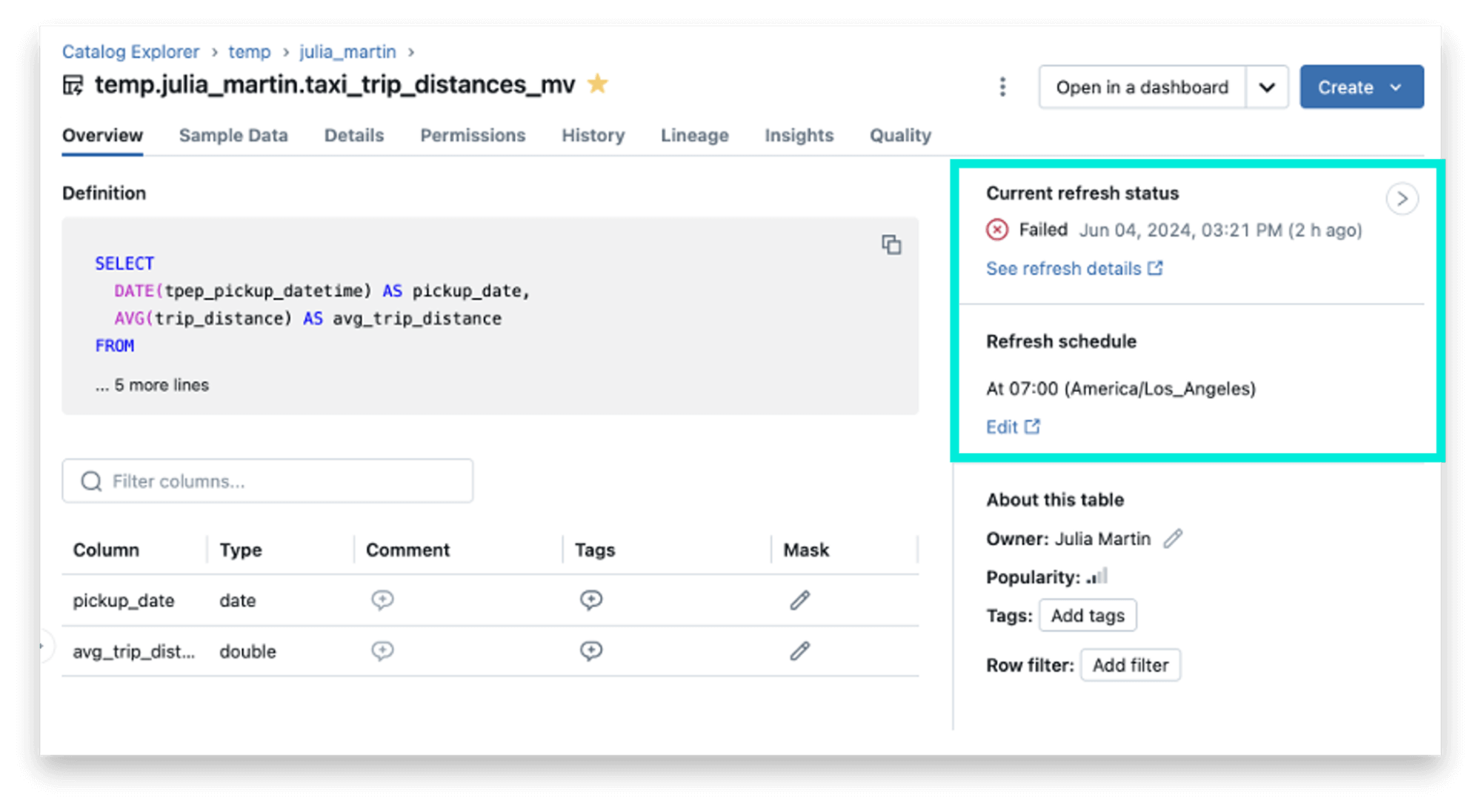

Materialized views and streaming tables

We’ve been talking about materialized views and streaming tables for a while, as they are a great way to reduce costs and improve query latency. (Fun fact: materialized views were first supported in Databricks with the launch of Delta Live Tables.) These features are now generally available (woot), but we just couldn’t help ourselves. We have added new capabilities in the general availability release, including improved observability, scheduling, and cost attribution.

- Observability: the catalog explorer includes contextual, real-time information about the status and schedule of materialized views and streaming tables.

- Scheduling: the EVERY syntax is now available for scheduling materialized view and streaming table refreshes using DDL.

- Cost attribution: the system tables can show you who is refreshing materialized views and streaming tables.

To learn more about materialized views and streaming tables, see the blog announcing the general availability of materialized views and streaming tables in Databricks SQL.

Publish to Power BI

Now, you can create semantic models from tables/schemas on Databricks and publish them all directly to Power BI Service. Comments on a table’s columns are copied to the descriptions of corresponding columns in Power BI.

To get started, see Publish to Power BI Online from Azure Databricks.

Integration with Data Intelligence Platform

These features for Databricks SQL are part of the Databricks Data Intelligence Platform. Databricks SQL benefits from the platform’s capabilities of simplicity, unified governance, and openness of the lakehouse architecture. The following are a few new platform features that are especially useful for Databricks SQL.

Compute budget policies

Compute budget policies help manage and enforce cost allocation best practices for compute, regardless of whether you’re running interactive workloads, scheduled jobs, or even Delta Live Tables.

Vector Search native support in Databricks SQL

Vector databases and vector search use cases are multiplying. In Q3, we launched a gated Public Preview for Databricks SQL support for Vector Search. This integration means you can call Mosaic AI Vector Search directly from SQL. Now, anyone can use vector search to build RAG applications, generate search recommendations, or power analytics on unstructured data.

vector_search() is now available in Public Preview in regions where Mosaic AI Vector Search is supported. For more information, see vector_search function.

More details on new innovations

We hope you enjoy this bounty of new innovations in Databricks SQL. You can always check this What’s New post for the previous three months. Below is a complete inventory of launches we’ve blogged about over the last quarter:

As always, we continue to work to bring you even more cool features. Stay tuned to the quarterly roadmap webinars to learn what’s on the horizon for Data Warehousing and AI/BI. It’s an exciting time to be working with data, and we’re excited to partner with Data Architects, Analysts, BI Analysts, and more to democratize data and AI within your organizations!

To learn more about Databricks SQL, visit our website or read the documentation. You can also check out the product tour for Databricks SQL. If you want to migrate your existing warehouse to a high-performance, serverless data warehouse with a great user experience and lower total cost, Databricks SQL is the solution. Try it for free.

To participate in private previews or gated public previews, contact your Databricks account team.