Generative AI fever shows no signs of cooling off. As pressure and excitement build to implement robust GenAI systems, data leaders and practitioners are searching for the best platform, tools and use cases to help them get there.

How is this playing out in the real world? We just released the 2024 State of Data + AI, which draws on data from our 10,000 global customers to understand how organizations across industries are approaching AI. While the report covers a broad range of themes relevant to any data-driven company, clear trends emerged on the GenAI journey.

Here’s a snapshot of what we discovered:

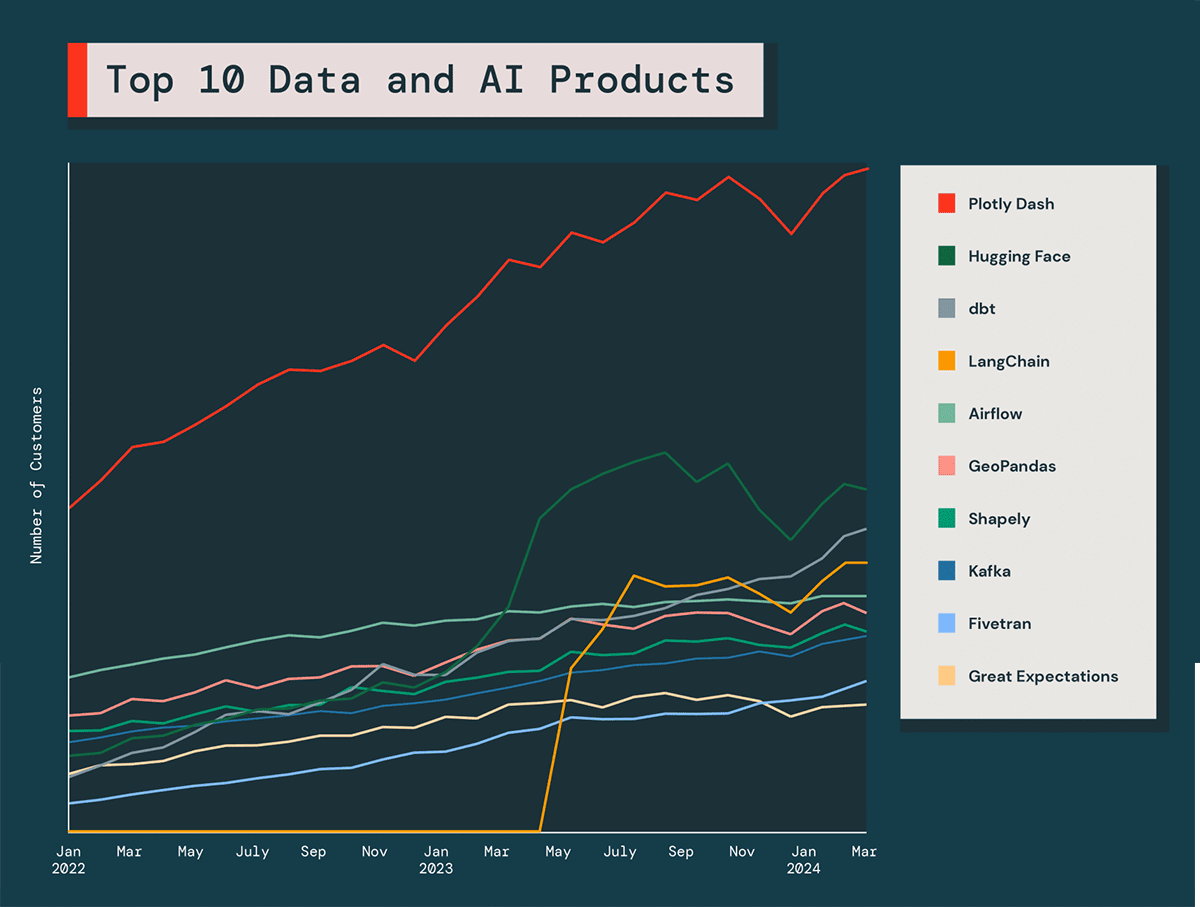

Top 10 data and AI products: the GenAI stack is forming

With any new technology, developers experiment with many different tools to figure out what works best for them.

Our Top 10 Data and AI Products showcase the most widely adopted integrations on the Databricks Data Intelligence Platform. From data integration to model development, this list shows how companies are investing in their stack to support new GenAI priorities:

Hugging Face transformers jumps to No. 2

In just one year, Hugging Face jumped from spot #4 to spot #2. Many companies use the open source platform’s transformer models together with their enterprise data to build and fine-tune foundation models.

LangChain becomes a top product months after integration

LangChain, an open source toolchain for working with and building proprietary LLMs, rose to spot #4 less than a year after its integration. When companies build their own modern LLM applications and work with specialized transformer-related Python libraries to train the models, LangChain lets them develop prompt interfaces or integrations with other systems.

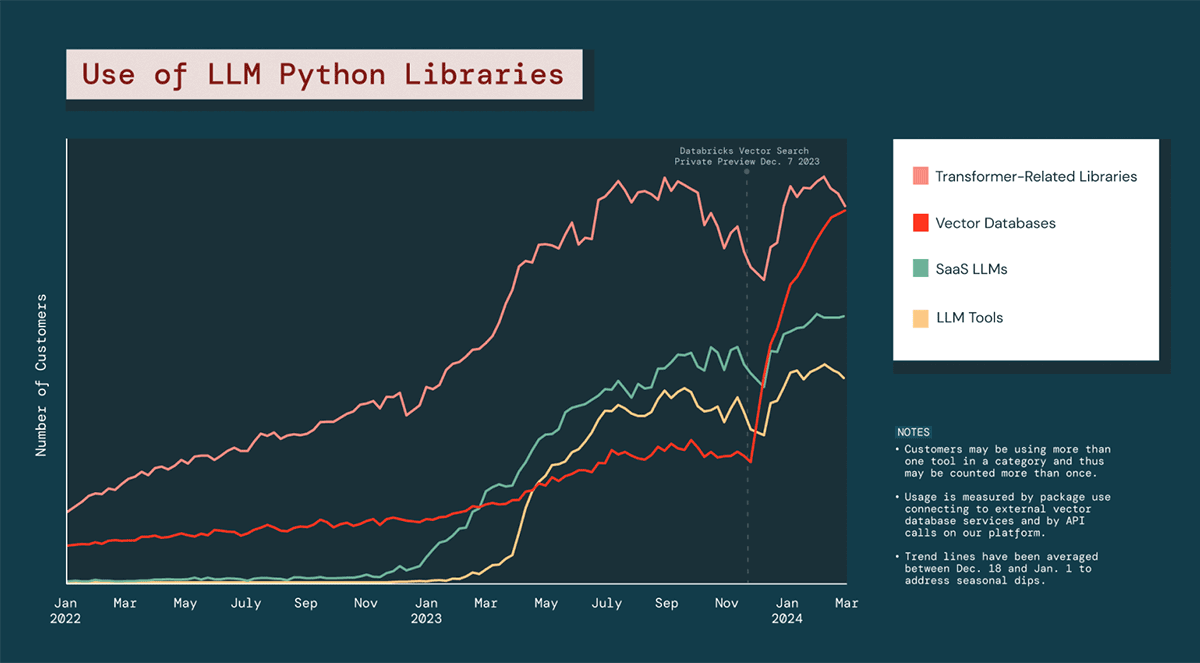

Enterprise GenAI is all about customizing LLMs

Last year, when we analyzed the most popular LLM Python libraries, our data showed SaaS LLMs as the “it” tool of 2023. This year, our data shows that use of general-purpose LLMs continues, but with slower year-over-year growth.

This year’s strategy has taken a major shift. Our data shows that companies are hyper-focused on augmenting LLMs with their custom data rather than simply using standalone, off-the-shelf LLMs.

Businesses want to harness the power of SaaS LLMs, but also improve accuracy and mold the underlying models to work better for them. With retrieval augmented generation (RAG), companies can use something like an employee handbook or their own financial statements so the model starts to generate outputs that are specific to the business. And there’s huge demand across our customers to build these customized systems. The use of vector databases, a major component of RAG applications, grew 377% in the last year, including a 186% jump after Databricks Vector Search went into Public Preview.
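To make the RAG pattern concrete, here is a minimal, self-contained sketch of its retrieval step. It is illustrative only: the `embed` function is a hypothetical toy (word counts) standing in for a real embedding model, `InMemoryVectorIndex` is a hypothetical in-memory list standing in for a vector database such as Databricks Vector Search, and the handbook text is invented. No LLM is called; the sketch stops at building the augmented prompt that would be sent to one.

```python
import math
import re
from collections import Counter

# Hypothetical stand-in for a real embedding model: a bag-of-words term-count vector.
def embed(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

# Cosine similarity between two sparse term-count vectors.
def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# Hypothetical toy "vector database": stores (chunk, embedding) pairs in memory.
class InMemoryVectorIndex:
    def __init__(self, chunks):
        self.rows = [(chunk, embed(chunk)) for chunk in chunks]

    def search(self, query: str, k: int = 2):
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:k]]

# Retrieve the most relevant company chunks and prepend them to the user's question.
def build_rag_prompt(question: str, index: InMemoryVectorIndex, k: int = 2) -> str:
    context = "\n".join(f"- {chunk}" for chunk in index.search(question, k))
    return (
        "Answer using only the company context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )

# Invented example content, standing in for an employee handbook.
handbook = [
    "Employees accrue 20 days of paid vacation per year.",
    "Expense reports must be submitted within 30 days.",
    "The office is closed on public holidays.",
]
index = InMemoryVectorIndex(handbook)
print(build_rag_prompt("How many vacation days do employees get?", index, k=1))
```

In a production system, the toy embedding and linear scan would be replaced by a learned embedding model and an approximate nearest-neighbor index; the shape of the flow (embed, retrieve, augment the prompt) stays the same.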

The enterprise AI strategy and open LLMs

As companies build their technology stacks, open source is making its mark. In fact, 9 of the top 10 products are open source, including our two big GenAI players: Hugging Face and LangChain.

Open source LLMs also offer many business benefits, such as the ability to customize them to an organization’s unique needs and use cases. We analyzed open source model usage of Meta Llama and Mistral, the two biggest players, to understand which models companies gravitated toward.

With every model, there’s a trade-off between cost, latency and performance. Combined usage of the two smallest Meta Llama 2 models (7B and 13B) is significantly higher than usage of the largest, Meta Llama 2 70B.

Across both Llama and Mistral users, 77% choose models with 13B parameters or fewer. This suggests that companies care significantly about cost and latency.
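One rough way to see why smaller models are cheaper to serve is to estimate the memory needed just to hold their weights. The sketch below assumes 16-bit (fp16/bf16) weights, i.e. 2 bytes per parameter, uses nominal parameter counts, and ignores activations, KV cache and framework overhead, so real serving requirements are higher. It is a back-of-the-envelope estimate, not a measurement from the report.

```python
# Assumption: 16-bit weights (fp16/bf16), i.e. 2 bytes per parameter.
BYTES_PER_PARAM_16BIT = 2

def weight_memory_gb(num_params_billions: float, bytes_per_param: int = BYTES_PER_PARAM_16BIT) -> float:
    # (billions of params * 1e9) * bytes per param / 1e9 bytes per GB (decimal GB).
    # Lower bound only: activations, KV cache and runtime overhead are not counted.
    return num_params_billions * 1e9 * bytes_per_param / 1e9

# Nominal sizes of the Llama 2 models discussed above.
for name, size_b in [("Llama 2 7B", 7), ("Llama 2 13B", 13), ("Llama 2 70B", 70)]:
    print(f"{name}: ~{weight_memory_gb(size_b):.0f} GB of weights")
# Llama 2 7B: ~14 GB of weights
# Llama 2 13B: ~26 GB of weights
# Llama 2 70B: ~140 GB of weights
```

Under these assumptions, the 70B model needs more than five times the weight memory of the 13B model, which typically means more or larger accelerators per replica and higher per-token latency.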

Dive deeper into these and other trends in the 2024 State of Data + AI. Consider it your playbook for an effective data and AI strategy. Download the full report here.