At the 2025 CES event, Nvidia announced a new $3000 desktop computer developed in collaboration with MediaTek, which is powered by a new cut-down Arm-based Grace CPU and Blackwell GPU Superchip. The new system is called “Project DIGITS” (not to be confused with the Nvidia Deep Learning GPU Training System: DIGITS). The platform offers a series of new capabilities for both the AI and HPC markets.

Project DIGITS features the new Nvidia GB10 Grace Blackwell Superchip with 20 Arm cores and is designed to offer a “petaflop” (at FP4 precision) of GPU-AI computing performance for prototyping, fine-tuning, and running large AI models. (An important floating point explainer may be helpful here.)

Since the release of the G8x line of video cards (2006), Nvidia has done a good job of making CUDA tools and libraries available across its entire line of GPUs. The ability to use a low-cost consumer video card for CUDA development has helped create a vibrant ecosystem of applications. Given the cost and scarcity of performant GPUs, the DIGITS project should enable more LLM-based software development. As with a low-cost GPU, the ability to run, configure, and fine-tune open transformer models (e.g., Llama) on a desktop should be attractive to developers. For example, by offering 128GB of memory, the DIGITS system will help overcome the 24GB limitation of many lower-cost consumer video cards.

Scant Specs

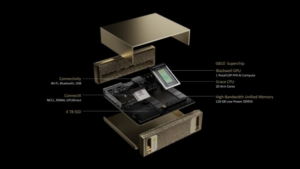

The new GB10 Superchip features an Nvidia Blackwell GPU with latest-generation CUDA cores and fifth-generation Tensor Cores, connected via NVLink-C2C chip-to-chip interconnect to a high-performance Nvidia Grace-like CPU, which includes 20 power-efficient Arm cores (ten Arm Cortex-X925 and ten Cortex-A725 CPU cores). Although no specifications were available, the GPU side of the GB10 is assumed to offer less performance than the Grace-Blackwell GB200. To be clear, the GB10 is not a binned or laser-trimmed GB200. The GB200 Superchip has 72 Arm Neoverse V2 cores combined with two B200 Tensor Core GPUs.

The defining feature of the DIGITS system is the 128GB (LPDDR5x) of unified, coherent memory shared between CPU and GPU. This memory size breaks a “GPU memory barrier” when running AI or HPC models on GPUs; for instance, current market prices for the 80GB Nvidia A100 vary from $18,000 to $20,000. With unified, coherent memory, PCIe transfers between CPU and GPU are also eliminated. The rendering in the image indicates that the amount of memory is fixed and cannot be expanded by the user. The diagram also indicates that ConnectX networking (Ethernet?), Wi-Fi, Bluetooth, and USB connections are available.

The system also provides up to 4TB of NVMe storage. In terms of power, Nvidia mentions a standard electrical outlet. No specific power requirements are given, but the size and design may offer a few clues. First, like the Mac mini systems, the small size (see Figure 2) indicates that the amount of generated heat must not be that high. Second, based on images from the CES show floor, no fan vents or cutouts exist. The front and back of the case appear to have a sponge-like material that could provide air flow and may serve as whole-system filters. Since thermal design indicates power and power indicates performance, the DIGITS system is probably not a screamer tweaked for maximum performance (and power usage), but rather a cool, quiet, and capable AI desktop system with an optimized memory architecture.

As mentioned, the system is quite small. The image below offers some perspective against a keyboard and monitor. (There are no cables shown. In our experience, some of these small systems can get pulled off the desktop by the cable weight.)

AI on the desktop

Nvidia reports that developers can run up to 200-billion-parameter large language models to supercharge AI innovation. In addition, using Nvidia ConnectX networking, two Project DIGITS AI supercomputers can be linked to run up to 405-billion-parameter models. With Project DIGITS, users can develop and run inference on models using their own desktop system, then seamlessly deploy the models on accelerated cloud or data center infrastructure.

“AI will be mainstream in every application for every industry. With Project DIGITS, the Grace Blackwell Superchip comes to millions of developers,” said Jensen Huang, founder and CEO of Nvidia. “Placing an AI supercomputer on the desks of every data scientist, AI researcher, and student empowers them to engage and shape the age of AI.”

These systems are not intended for training but are designed to run quantized LLMs locally (quantization reduces the precision of the model weights). The one-petaFLOP performance number quoted by Nvidia is for FP4 precision weights (four bits, or 16 possible numbers).
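To make "four bits, or 16 possible numbers" concrete, the sketch below enumerates every value a 4-bit float can encode. It assumes the E2M1 layout (one sign bit, two exponent bits, one mantissa bit, exponent bias of 1, no Inf/NaN); the article does not say which FP4 encoding the GB10 uses, so treat the layout and the helper name `decode_e2m1` as illustrative assumptions.

```python
# Enumerate all values of a 4-bit float, ASSUMING the E2M1 layout:
# 1 sign bit, 2 exponent bits, 1 mantissa bit, exponent bias 1, no Inf/NaN.
# The layout is an assumption for illustration, not a confirmed GB10 detail.

def decode_e2m1(bits: int) -> float:
    """Decode a 4-bit pattern (0..15) under the assumed E2M1 layout."""
    sign = -1.0 if (bits >> 3) & 1 else 1.0
    exponent = (bits >> 1) & 0b11
    mantissa = bits & 1
    if exponent == 0:
        # Subnormal: no implicit leading 1, so only 0 and 0.5 are possible.
        magnitude = mantissa * 0.5
    else:
        # Normal: implicit leading 1, exponent bias of 1.
        magnitude = (1 + mantissa / 2) * 2.0 ** (exponent - 1)
    return sign * magnitude

patterns = [decode_e2m1(b) for b in range(16)]
# +0 and -0 compare equal, so they collapse to one entry in the set.
distinct = sorted(set(patterns))

print(len(patterns), "bit patterns,", len(distinct), "distinct values")
print(distinct)
```

Under this assumed layout the representable magnitudes top out at 6, which is why FP4 weights are normally paired with per-block scale factors in practice.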

Many models can run adequately at this level, but precision can be increased to FP8, FP16, or higher for potentially better results, depending on the size of the model and the available memory. For instance, using FP8 precision weights for a Llama-3-70B model requires one byte per parameter, or roughly 70GB of memory. Halving the precision to FP4 will cut that down to 35GB of memory, but increasing to FP16 will require 140GB, which is more than the DIGITS system offers.
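The arithmetic behind these figures is simply parameters times bits per parameter. The sketch below is a weights-only estimate: it ignores KV cache, activations, and runtime overhead, and uses decimal gigabytes to match the round numbers in the text. The function name `weights_gb` and the table of precisions are illustrative, not from Nvidia.

```python
# Weights-only memory estimate: parameters x bits per parameter / 8.
# Ignores KV cache, activations, and runtime overhead, so real needs are higher.
# Uses decimal gigabytes (1 GB = 1e9 bytes) to match the article's round numbers.

BITS = {"FP4": 4, "FP8": 8, "FP16": 16, "FP32": 32}
DIGITS_MEMORY_GB = 128  # unified memory of one system, per the article


def weights_gb(params_billion: float, precision: str) -> float:
    """Return the memory (GB) needed to hold the weights alone."""
    return params_billion * BITS[precision] / 8


# The 70-billion-parameter example from the text, at each precision.
for precision in BITS:
    need = weights_gb(70, precision)
    verdict = "fits" if need <= DIGITS_MEMORY_GB else "does not fit"
    print(f"70B @ {precision}: {need:.0f} GB ({verdict} in {DIGITS_MEMORY_GB} GB)")

# Nvidia's quoted model sizes, assuming FP4 weights.
print(weights_gb(200, "FP4"), "GB for 200B on one system (128 GB)")
print(weights_gb(405, "FP4"), "GB for 405B on two linked systems (256 GB)")
```

By this estimate, a 200-billion-parameter model at FP4 needs about 100 GB and a 405-billion-parameter model about 202.5 GB, which is consistent with the one-system and two-system claims before any working memory is counted.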

HPC cluster anybody?

What may not be widely known is that DIGITS is not the first desk-side Nvidia system. In 2024, GPTshop.ai released a GH200-based desk-side system. HPCwire provided coverage that included HPC benchmarks. Unlike the DIGITS project, the GPTshop systems provide the full heft of either the GH200 Grace-Hopper Superchip or the GB200 Grace-Blackwell Superchip in a desk-side case. The increased performance also comes with a higher cost.

Using Project DIGITS systems for desktop HPC could be an interesting approach. In addition to running larger AI models, the integrated CPU-GPU global memory could be very beneficial to HPC applications. Consider a recent HPCwire story about a CFD application running entirely on two Intel Xeon 6 Granite Rapids processors (no GPU). According to author Dr. Moritz Lehmann, the enabling factor for the simulation was the amount of memory he was able to use.

In a similar way, many HPC applications have had to find ways to get around the small memory domains of common PCIe-attached video cards. Using multiple cards or MPI helps spread out the application, but the most enabling factor in HPC is always more memory.

Of course, benchmarks are needed to fully determine the suitability of Project DIGITS for desktop HPC, but there is another possibility: “build a Beowulf cluster of these.” Often considered a bit of a joke, this phrase may be a bit more serious where the DIGITS project is concerned. Clusters are, of course, normally built with servers and (multiple) PCIe-attached GPU cards. However, a small, moderately powered CPU-GPU system with fully integrated global memory might make for a more balanced and attractive cluster building block. And here is the bonus: they already run Linux and have built-in ConnectX networking.

Editor’s note: This story first appeared in HPCwire.