There are two major problems with distributed data systems. The second is out-of-order messages, the first is duplicate messages, the third is off-by-one errors, and the first is duplicate messages.

This joke inspired Rockset to confront the data duplication issue through a process we call deduplication.

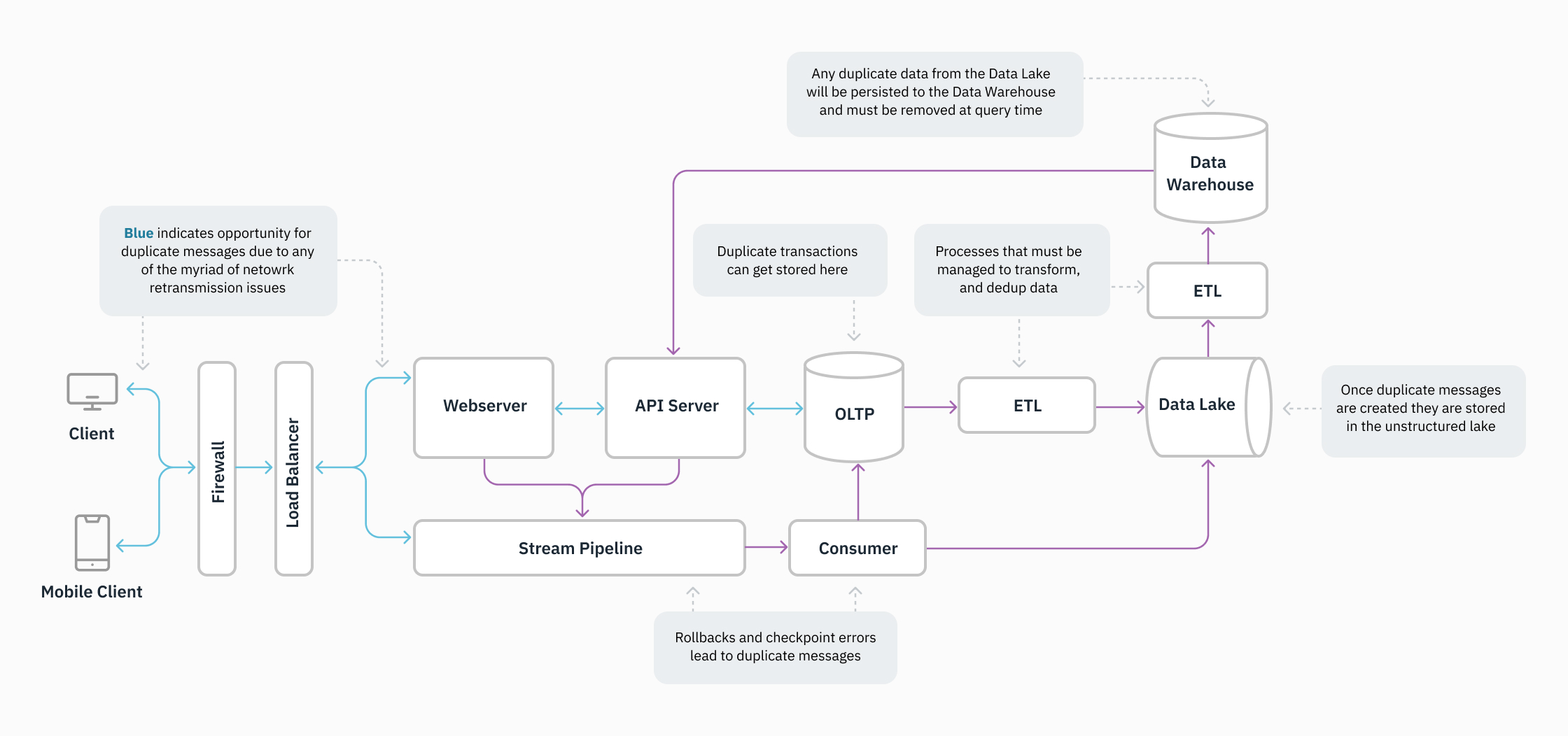

As data systems become more complex and the number of systems in a stack increases, data deduplication becomes more challenging, because duplication can occur in a multitude of ways. Whenever another distributed data system is added to the stack, organizations become wary of the operational tax on their engineering team. This blog post discusses data duplication, how it plagues teams adopting real-time analytics, and the deduplication solutions Rockset provides to resolve the duplication issue.

Rockset addresses data duplication in a simple way and helps free teams from the complexities of deduplication, which include untangling where duplication is occurring, setting up and managing extract, transform, load (ETL) jobs, and attempting to solve duplication at query time.

The Duplication Problem

In distributed systems, messages are passed back and forth between many workers, and it's common for a message to be generated two or more times. A system may create a duplicate message because:

- An acknowledgment was not sent.

- The message was replicated before it was sent.

- The message acknowledgment arrived after a timeout.

- Messages were delivered out of order and had to be resent.

By the time a message arrives at a database management system, it may have been received multiple times with the same information. Your system must therefore ensure that duplicate records aren't created: duplicate records can be costly and take up memory unnecessarily. These duplicated messages must be consolidated into a single message.
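To make the timeout case concrete, here is a minimal Python simulation of an at-least-once sender. The `Receiver` class, the `send_with_retry` function, and the delay values are all hypothetical. The first delivery succeeds, but its acknowledgment arrives after the timeout, so the sender resends and the receiver ends up holding two identical copies.

```python
from dataclasses import dataclass, field


@dataclass
class Receiver:
    """Hypothetical naive receiver that stores every message it is handed."""
    stored: list = field(default_factory=list)

    def receive(self, msg: dict) -> None:
        self.stored.append(msg)


def send_with_retry(receiver: Receiver, msg: dict, ack_delays_ms: list, timeout_ms: int) -> int:
    """Deliver msg, retrying whenever the acknowledgment is slower than timeout_ms.

    ack_delays_ms[i] is the simulated time the i-th delivery's acknowledgment
    takes; its length caps the number of attempts. Returns the attempt count.
    """
    attempts = 0
    for delay in ack_delays_ms:
        attempts += 1
        receiver.receive(msg)    # the message always reaches the receiver...
        if delay <= timeout_ms:  # ...but only a timely ack stops the retries
            break
    return attempts


receiver = Receiver()
attempts = send_with_retry(
    receiver, {"order_id": 42, "status": "paid"}, ack_delays_ms=[120, 30], timeout_ms=100
)
print(attempts, len(receiver.stored))  # 2 2
```

Neither side did anything wrong here: the sender cannot distinguish a lost message from a slow acknowledgment, so retrying is the safe choice, and deduplication has to happen somewhere downstream.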

Deduplication Solutions

Before Rockset, there were three common deduplication methods:

- Stop duplication before it happens.

- Stop duplication during ETL jobs.

- Stop duplication at query time.

Deduplication History

Kafka was one of the first systems to offer a solution for duplication. Its exactly-once semantics guarantee that a message is delivered once and only once. However, if the duplication occurs upstream from Kafka, Kafka sees the copies as distinct messages rather than duplicates and delivers all of them, each with a different timestamp. Exactly-once semantics therefore don't always solve duplication issues, and the surviving duplicates can negatively impact downstream workloads.
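The gap can be illustrated with a deliberately simplified model of idempotent-producer deduplication, in which the broker remembers the highest sequence number accepted from each producer and drops retries that reuse one. This is a sketch, not Kafka's broker code: real brokers also track producer epochs and handle out-of-sequence errors, and the `Partition` class and event values below are hypothetical.

```python
class Partition:
    """Simplified model of broker-side duplicate detection for an idempotent producer.

    The broker remembers the highest sequence number seen per producer ID and
    drops any record whose sequence number it has already accepted.
    """

    def __init__(self) -> None:
        self.log = []       # records appended to this partition, in order
        self.last_seq = {}  # producer ID -> highest accepted sequence number

    def append(self, producer_id: int, seq: int, value: str) -> bool:
        if seq <= self.last_seq.get(producer_id, -1):
            return False  # a retry of a record already written: dropped
        self.last_seq[producer_id] = seq
        self.log.append(value)
        return True


p = Partition()
results = [
    p.append(producer_id=1, seq=0, value="order-42 paid"),  # first send: written
    p.append(producer_id=1, seq=0, value="order-42 paid"),  # producer retry: dropped
    # An upstream service emitted the same event twice, so the producer sent
    # it as a new record with a new sequence number; the broker cannot tell.
    p.append(producer_id=1, seq=1, value="order-42 paid"),
]
print(results, p.log)  # [True, False, True] ['order-42 paid', 'order-42 paid']
```

Sequence numbers identify *sends*, not business events, which is why duplicates created before the producer ever sees the data pass straight through.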

Stop Duplication Before It Happens

Some platforms attempt to stop duplication before it happens. This seems ideal, but it requires difficult and costly work to identify where and why the duplication occurs.

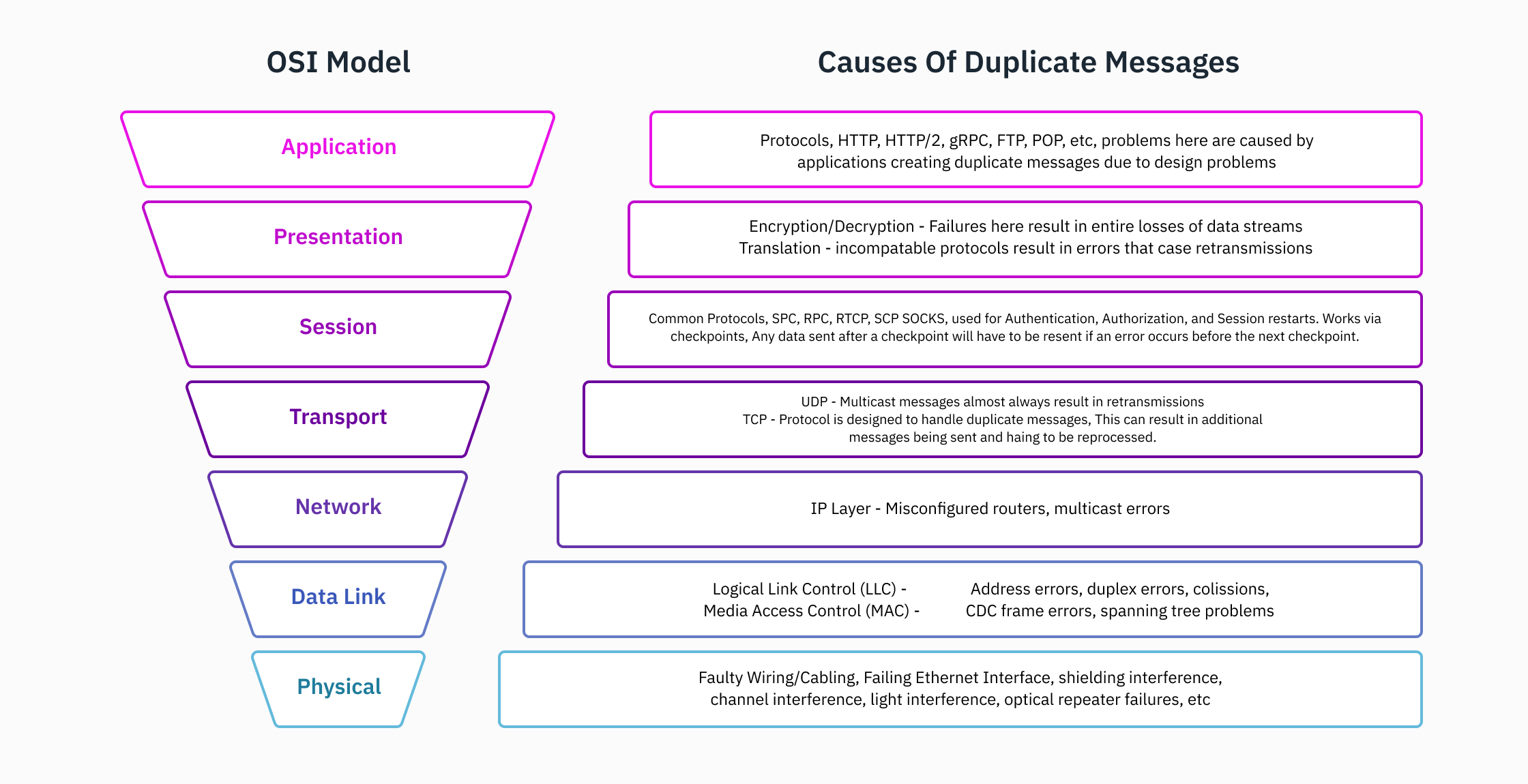

Duplication is commonly caused by any of the following:

- A switch or router.

- A failing consumer or worker.

- A problem with gRPC connections.

- Too much traffic.

- A window size that is too small for packets.

Note: This is not an exhaustive list.

This deduplication approach requires in-depth knowledge of the system network, as well as the hardware and framework(s). It is very rare, even for a full-stack developer, to understand the intricacies of every layer of the OSI model and how each is implemented at a company. In an organization of any substantial size, the data storage, access to data pipelines, data transformation, and application internals are all beyond the scope of a single individual, which is why organizations have specialized job titles. Troubleshooting and identifying every place where messages are duplicated requires in-depth knowledge that is simply unreasonable to expect of an individual, or even of a cross-functional team. Although the cost and expertise requirements are very high, this approach offers the greatest reward.

Stop Duplication During ETL Jobs

Another deduplication method is to use stream-processing ETL jobs, which deduplicate data as the stream is consumed. Options include creating a compacted topic and/or introducing an ETL job with a common batch processing tool (e.g., Fivetran, Airflow, and Matillion). However, ETL jobs come with additional overhead to manage, require more compute spend, add complexity and potential failure points, and introduce latency into a system that may need high throughput.
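As a rough illustration of what a compacted topic retains, the following sketch keeps only the latest value per key and treats a `None` value as a tombstone that deletes the key. It models the outcome of compaction on an in-memory list of `(key, value)` pairs; the `compact` function and the sample events are hypothetical, not a Kafka API.

```python
def compact(log):
    """Return the state a fully compacted log would retain.

    log is a list of (key, value) pairs in offset order. Later offsets
    overwrite earlier ones, and a value of None acts as a tombstone that
    removes the key entirely.
    """
    latest = {}
    for key, value in log:
        latest[key] = value  # the most recent record for each key wins
    return {key: value for key, value in latest.items() if value is not None}


log = [
    ("order-42", "created"),
    ("order-7", "created"),
    ("order-42", "created"),  # duplicate delivery
    ("order-42", "paid"),
    ("order-7", None),        # tombstone: order-7 deleted
]
print(compact(log))  # {'order-42': 'paid'}
```

In Kafka, compaction runs in the background and does not touch the active log segment, so a consumer reading near the head of the topic can still see duplicates before the cleaner catches up.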

For deduplication to be effective with stream-processing ETL jobs, the ETL jobs must run throughout your system. Since duplication can occur anywhere in a distributed system, it is paramount that the architecture deduplicates everywhere messages are passed.

Stream processors can have an active processing window (open for a specific period of time) in which duplicate messages are detected and compacted, and out-of-order messages are reordered. Messages received outside that window can still be duplicated. Furthermore, these stream processors must be maintained and can consume considerable compute resources and operational overhead.

Note: Because messages received outside the active processing window can still be duplicated, we don't recommend relying on this method alone to solve deduplication issues.
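The window limitation can be seen in a small sketch of a time-windowed deduplicator. It assumes each message carries a stable ID and that timestamps arrive in non-decreasing order; the `WindowedDeduplicator` class and the 5-second window are hypothetical choices for illustration, not any particular stream processor's API.

```python
class WindowedDeduplicator:
    """Drop messages whose ID was already seen within the last window_ms.

    IDs older than the window are evicted to bound memory, which is exactly
    why a duplicate arriving after the window closes is let through.
    Assumes timestamps arrive in non-decreasing order.
    """

    def __init__(self, window_ms: int) -> None:
        self.window_ms = window_ms
        self.seen = {}  # message ID -> timestamp (ms) when first seen

    def accept(self, msg_id: str, ts_ms: int) -> bool:
        # Evict IDs that have fallen out of the active window.
        self.seen = {k: t for k, t in self.seen.items() if ts_ms - t <= self.window_ms}
        if msg_id in self.seen:
            return False  # duplicate inside the window: dropped
        self.seen[msg_id] = ts_ms
        return True


dedup = WindowedDeduplicator(window_ms=5_000)
events = [("a", 0), ("a", 1_000), ("b", 2_000), ("a", 9_000)]
decisions = [dedup.accept(msg_id, ts) for msg_id, ts in events]
print(decisions)  # [True, False, True, True]
```

The duplicate of `"a"` at 1 second is caught, but the copy at 9 seconds arrives after the ID has been evicted and is accepted as new. Widening the window only moves the boundary while increasing the state the processor must hold.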

Stop Duplication at Query Time

Another deduplication method is to attempt to solve it at query time. However, this increases the complexity of your queries, which is risky because it creates more room for query errors.

For example, if your solution tracks messages using timestamps and the duplicate messages are delayed by one second (instead of 50 milliseconds), the timestamps on the duplicates will no longer match the condition in your query, causing an error to be thrown.
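Here is one way such a query-time approach might look, as a minimal sketch using Python's built-in `sqlite3` module. It assumes a hypothetical `events` table with no stable message ID, so a row counts as a duplicate when an identical payload arrived at most `tolerance_ms` earlier. In this sketch the timing mismatch surfaces as a duplicate that silently survives the query rather than as a raised error.

```python
import sqlite3

# Hypothetical schema: events carry a payload and an arrival timestamp (ms)
# but no stable message ID, so duplicates must be inferred from timing.
DEDUP_QUERY = """
SELECT e.payload, e.ts_ms
FROM events AS e
WHERE NOT EXISTS (
    SELECT 1 FROM events AS earlier
    WHERE earlier.payload = e.payload
      AND earlier.ts_ms < e.ts_ms                    -- strictly earlier copy
      AND e.ts_ms - earlier.ts_ms <= :tolerance_ms   -- within the tolerance
)
ORDER BY e.ts_ms
"""


def query_time_dedup(rows, tolerance_ms=50):
    """Load rows into an in-memory table and return them deduplicated."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE events (payload TEXT, ts_ms INTEGER)")
        conn.executemany("INSERT INTO events VALUES (?, ?)", rows)
        return conn.execute(DEDUP_QUERY, {"tolerance_ms": tolerance_ms}).fetchall()
    finally:
        conn.close()


rows = [
    ("order-42 created", 1_000),
    ("order-42 created", 1_030),  # duplicate 30 ms later: filtered out
    ("order-7 created", 2_000),
    ("order-7 created", 3_000),   # duplicate 1 s later: survives the query
]
result = query_time_dedup(rows)
print(result)
```

Every query that reads this data has to repeat the correlated subquery, and the tolerance becomes a tuning knob that is wrong whenever upstream latency changes.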

How Rockset Solves Duplication

Rockset solves the duplication problem through unique SQL-based transformations at ingest time.

Rockset is a Mutable Database

Rockset is a mutable database and allows duplicate messages to be merged at ingest time. This frees teams from the many cumbersome deduplication options covered earlier.

Each document has a unique identifier called _id that acts like a primary key. Users can specify this identifier at ingest time (e.g., during updates) using SQL-based transformations. When a new document arrives with the same _id, the duplicate message is merged into the existing record. This gives users a simple solution to the duplication problem.

When you bring data into Rockset, you can build your own complex _id key using SQL transformations that:

- Identify a single key.

- Identify a composite key.

- Extract data from multiple keys.
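In Rockset these keys are built with SQL ingest transformations; the Python sketch below only mirrors the three strategies as plain functions so the logic is easy to see. The field names (`order_id`, `customer_id`, `payload.event_type`) and the choice to hash the extracted fields with SHA-256 are hypothetical, as is our reading of "extract data from multiple keys" as combining several (possibly nested) fields.

```python
import hashlib
import json


def single_key_id(doc: dict) -> str:
    # Single key: reuse one field that is already unique per record.
    return str(doc["order_id"])


def composite_key_id(doc: dict) -> str:
    # Composite key: combine fields that are only unique together.
    return f'{doc["customer_id"]}:{doc["order_id"]}'


def extracted_key_id(doc: dict) -> str:
    # Extract data from multiple keys, including a nested one, and hash the
    # result so the _id has a fixed length regardless of the inputs.
    parts = [doc["customer_id"], doc["order_id"], doc["payload"]["event_type"]]
    return hashlib.sha256(json.dumps(parts).encode("utf-8")).hexdigest()


doc = {"customer_id": "c-9", "order_id": 42, "payload": {"event_type": "paid", "amount": 10}}
print(single_key_id(doc))     # 42
print(composite_key_id(doc))  # c-9:42
```

Whichever strategy is chosen, the requirement is the same: every copy of the same logical event must produce the same _id, while distinct events must not collide.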

Rockset is fully mutable, with no active window. As long as you specify _id on incoming messages, or identify _id within the document you are updating or inserting, incoming duplicate messages will be deduplicated and merged into a single document.
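A toy model helps show why keying on _id removes the need for a window. The `UpsertStore` class below is illustrative only and is not Rockset's storage engine; in particular, the field-level overwrite used as the merge rule is an assumption for this sketch. Because nothing in it depends on arrival time, a duplicate that shows up a day later is merged exactly like one that shows up a millisecond later.

```python
class UpsertStore:
    """Toy document store keyed by _id, with no active window.

    Illustrative only: models merge-on-_id, not Rockset's engine.
    The merge rule (newer fields overwrite older ones) is an assumption.
    """

    def __init__(self) -> None:
        self.docs = {}  # _id -> current merged document

    def ingest(self, doc: dict) -> None:
        doc_id = doc["_id"]
        merged = dict(self.docs.get(doc_id, {}))  # existing state, if any
        merged.update(doc)                        # assumed field-level overwrite
        self.docs[doc_id] = merged


store = UpsertStore()
store.ingest({"_id": "c-9:42", "status": "created", "ts": 0})
store.ingest({"_id": "c-9:42", "status": "created", "ts": 0})          # duplicate delivery
store.ingest({"_id": "c-9:42", "status": "paid", "ts": 86_400_000})    # a day later: still merged
print(len(store.docs), store.docs["c-9:42"]["status"])  # 1 paid
```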

Rockset Allows Data Mobility

Other analytics databases store data in fixed data structures, which require compaction, resharding and rebalancing. Any change to existing data requires a major overhaul of the storage structure, so many data systems use active windows to avoid those overhauls. As a result, if you map _id to a record outside the active window, that record will fail. In contrast, Rockset users have a great deal of data mobility and can update any record in Rockset at any time.

A Customer Win With Rockset

While we've discussed the operational challenges of data deduplication in other systems, there's also a compute-spend element. Attempting deduplication at query time or with ETL jobs can be computationally expensive for many use cases.

Rockset can handle data changes, and it supports inserts, updates and deletes that benefit end users. Here's an anonymized story of one of the users I've worked closely with on their real-time analytics use case.

Customer Background

A customer had a massive volume of data changes that created duplicate entries in their data warehouse. Every database change resulted in a new record, even though the customer only wanted the current state of the data.

If the customer had put this data into a data warehouse that cannot map _id, they would have had to cycle through the multiple events stored in their database: running a base query followed by additional event queries to arrive at the latest state of each value. This process is extremely computationally expensive and time consuming.

Rockset's Solution

Rockset provided a more efficient deduplication solution. Because Rockset maps _id, only the latest state of each record is stored and all incoming events are deduplicated, so the customer only needed to query the latest state. This functionality let the customer reduce both the compute required and the query processing time, efficiently delivering sub-second queries.

Rockset is the real-time analytics database in the cloud for modern data teams. Get faster analytics on fresher data, at lower costs, by exploiting indexing over brute-force scanning.